On How Near the Singularity Really Is

A commentary on Ray Kurzweil’s latest book, The Singularity Is Near

Alex Backer, 6/4/2006 (edited March 2010)

Although it verges on the religious (on the first page of Chapter One, Kurzweil takes the trouble to explain that he regards ‘someone who understands the Singularity and who has reflected on its implications for his or her own life’ as a “singularitarian”), Kurzweil’s latest book makes a fascinating read.

To a certain extent, the basic premise of the book is self-evident: that the pace of progress accelerates over time. There are multiple reasons for this, including that more people are alive today than at any time in the past, and that each invention facilitates the next. Kurzweil extends this trend to its limit, and infers that at some point the accelerating pace becomes so fast that it amounts to a 'singularity': a unique discontinuity in a timeline.

Kurzweil argues that a bottleneck in human processing speed will not become limiting, because computers will get us past it. But a) the bottleneck could be reached before we develop ultraintelligent machines, and b) if ultraintelligent machines are built for people, then some of their effects will be subject to human timescales.

Although Kurzweil appears fascinated by exponential growth, exponential change is in many ways ‘the norm’: exponential increases in several performance metrics are what you get from linear scientific output. Nobody today is interested in inventing a method that augments computer memory by 2 kb, even though that improvement doubled my first computer’s memory some twenty-something years ago. An improvement of similar relative size (for example, finding a way to fit twice as many chips in the same space), repeated at a steady rate, leads to exponential growth by definition. The more things change, the more they stay the same. Indeed, measures of scientific output show that, at least by ordinary metrics such as the number of papers published per unit time, science stopped growing exponentially many decades ago (Boyack and Bäcker, 2003). And although one could argue that papers today contain more information than papers in the past (indeed, we have papers today that present findings on entire genomes, while a study of a single gene was newsworthy not many years ago), it is hard to find any paper from the last fifty years of biology as influential as, for example, Watson and Crick’s 1953 paper elucidating the structure of DNA. One could also argue that the linear increase in scientific throughput reflects a deficiency of the scientific publishing system. But while that system certainly deserves an overhaul, it remains the primary way in which science is communicated, experiments in scientific publishing aside (Bäcker, 2006).

So it is really superexponential growth processes (some of which Kurzweil describes) that he should focus on.
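The difference between exponential and superexponential growth can be made concrete with a standard textbook pair of growth laws, chosen here for illustration rather than taken from Kurzweil’s text. Here $x_0 > 0$ is the value at $t = 0$ and $k > 0$ is a rate constant. When the rate of change is proportional to the quantity itself, the doubling time is constant and the quantity stays finite at every finite time; when the rate grows with the square of the quantity, the solution reaches infinity at a finite time $t^*$, which is a singularity in the strict mathematical sense.

$$
\frac{dx}{dt} = kx \quad\Longrightarrow\quad x(t) = x_0\, e^{kt}, \qquad \text{doubling time} = \frac{\ln 2}{k}
$$

$$
\frac{dx}{dt} = kx^2 \quad\Longrightarrow\quad x(t) = \frac{x_0}{1 - k x_0 t}, \qquad x(t) \to \infty \ \text{as} \ t \to t^* = \frac{1}{k x_0}
$$

In the second case each doubling takes half as long as the one before: $x$ first doubles at $t^*/2$, again at $3t^*/4$, and so on, converging on $t^*$.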

Not only is the cause of exponential growth in, say, computing power a linear process, but its consequences are linear, too. Today a 2 kb increase in memory or a 2 kHz increase in clock speed makes our lives only marginally better, whereas changes of the same (linear) magnitude had a far greater effect when they constituted a doubling. Our senses detect the logarithms of values, not the values themselves, so it takes exponential change to produce a linear change in perception.
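A minimal sketch of this arithmetic, taking Fechner’s logarithmic law as the model of perception (an assumption of the sketch; the memory sizes are illustrative, not measurements). Repeated doubling yields the same perceived gain at every step, a fixed +2 kb step feels smaller each time, and the same +2 kb that doubled a 2 kb machine is imperceptible on a hypothetical 16 GB one.

```python
import math


def perceived(stimulus: float) -> float:
    """Fechner's law (assumed here): perceived magnitude ~ log of the stimulus."""
    return math.log(stimulus)


def perceived_gain(before: float, after: float) -> float:
    """How much better a change feels under logarithmic perception."""
    return perceived(after) - perceived(before)


# Memory sizes in kilobytes (illustrative numbers only).
doubling = [2 * 2**n for n in range(6)]  # 2, 4, 8, 16, 32, 64: exponential growth
adding = [2 + 2 * n for n in range(6)]   # 2, 4, 6, 8, 10, 12: +2 kb at each step

# Perceived gain of each successive step in the two series.
gains_doubling = [perceived_gain(a, b) for a, b in zip(doubling, doubling[1:])]
gains_adding = [perceived_gain(a, b) for a, b in zip(adding, adding[1:])]

# The same +2 kb step, applied to a 2 kb machine and to a 16 GB one.
first_computer = perceived_gain(2, 4)
modern_machine = perceived_gain(16 * 2**20, 16 * 2**20 + 2)

print(gains_doubling)
print(gains_adding)
print(first_computer, modern_machine)
```

Under this model a linear count of doublings maps to linear perceived progress, which is the sense in which exponential change is needed just to keep the felt rate of improvement constant.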

The importance of the opposable thumb is often overstated, and Kurzweil is no exception. Monkeys are able to manipulate their environment (see video), and by many measures seem more dexterous with any of their limbs than we are with our hands.

Data may be doubling every year as he claims, but useful knowledge is not.

Despite taking the trouble to carefully define Singularitarian, Kurzweil’s book is rather unclear on what he means by the Singularity itself: “a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed”. Without a definition of what constitutes ‘so rapid’, ‘so deep’ and ‘irreversible transformation’, it’ll be hard to know when we’re there; one could reasonably argue that human life has already been irreversibly transformed many times, by changes in our genetic heritage, by fire, by writing and more.

Not that it’s impossible to define the Singularity precisely. Indeed, Kurzweil cites two legitimate definitions in his book (p. 10), although neither uses the word Singularity: one by Anissimov, the other by the legendary John von Neumann. The interesting thing is that the two definitions describe completely different phenomena. One is the empirical observation that technological development is accelerating, which suggests that technological progress must either keep accelerating until society is transformed at a whirlwind pace, or else slow its acceleration. The other is the theoretical prediction that once machines become able to improve themselves on their own, the pace of progress will accelerate tremendously.

I tend to agree with Anissimov that a discontinuity is likely once the first transhuman intelligence that launches itself into recursive self-improvement is created. But an intelligence capable of recursive self-improvement is not necessarily the same as a super-human intelligence. And while the two may arrive in close temporal proximity, it would be useful to clarify what the actual requirements for a Singularity are. It seems to me that recursive self-improvement is what leads directly to a Singularity, and that it is likely to arrive before super-human intelligence does.

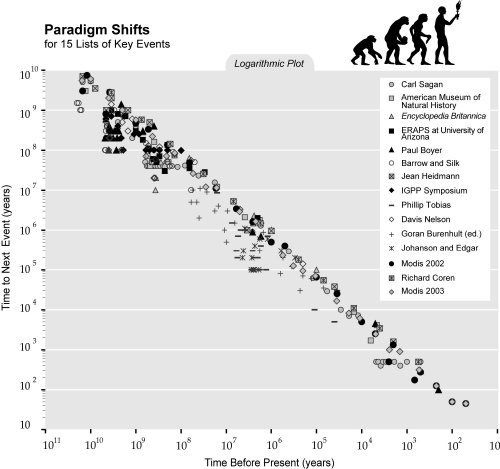

Kurzweil’s hypothesis rests primarily on his argument for an exponentially increasing rate of paradigm shifts. Unfortunately, his plots all end 50 years ago. The figure above is Kurzweil’s Countdown to Singularity plot. Now extend it to the present, assuming merely that the exponential acceleration Kurzweil describes in the past has continued for the last 50 years. If he is correct, the last fifty years should contain a large number of paradigm shifts as impactful as the advent of writing. Indeed, we should currently be seeing a paradigm shift of that magnitude roughly every year. If someone can tell me where they are, I would like to invest in them. Will the stock market rise with the tremendous value creation sure to come from these ever-more-frequent paradigm shifts? Then we should all be investing in the NASDAQ. Personally, I have no doubt that the Internet is one such paradigm shift of the last 50 years. The sequencing of the human genome, or the ability to sequence genomes rapidly and inexpensively, is probably another. But I am not yet seeing one a year, at least not of the relevance of the invention of writing or the wheel.
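A minimal sketch of this extrapolation, assuming (hypothetically) that each waiting time between paradigm shifts is a fixed fraction `shrink` of the previous one. The numbers are illustrative and are not read off Kurzweil’s figure; `shift_times` and `limit_date` are hypothetical helpers. Under this assumption the shifts crowd ever closer together and their dates converge to a finite limit, so a trend that had continued would show up as a dense cluster of major shifts in recent decades.

```python
def shift_times(first_interval: float, shrink: float, count: int) -> list[float]:
    """Dates of successive paradigm shifts, measured from a starting event,
    if every waiting time is `shrink` times the one before (hypothetical model)."""
    if not 0 < shrink < 1:
        raise ValueError("shrink must lie strictly between 0 and 1 for acceleration")
    times, t, interval = [], 0.0, first_interval
    for _ in range(count):
        t += interval       # the next shift arrives after the current waiting time
        times.append(t)
        interval *= shrink  # and the following wait is shorter by a fixed factor
    return times


def limit_date(first_interval: float, shrink: float) -> float:
    """Sum of the geometric series of waiting times: every shift falls before this date."""
    return first_interval / (1 - shrink)


dates = shift_times(100.0, 0.5, 4)
print(dates, limit_date(100.0, 0.5))
```

With a first interval of 100 years and `shrink=0.5`, the shifts fall at 100, 150, 175 and 187.5 years, and `limit_date` gives 200 years.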

In his discussion of complexity (p. 38), Kurzweil argues that a phone book should have low complexity under any reasonable definition, while something like a brain should have high complexity, so he argues for not counting ‘arbitrary data’ when measuring complexity. Yet it seems to me that the data in a phone book, linking people with physical addresses and with addresses in telephone space, is both as useful and as ‘arbitrary’ as the patterns that make up the complexity of the brain, namely which neurons are connected to which. If mathematical equations equate different ways of arriving at the same quantity, the phone book can be said to contain ‘equations’ providing alternative ways of reaching the same person, in physical space and in telephone space. If we knew the ‘phone book of the brain’, so to speak, its connectivity, we might well know most of what we need to understand how it works, e.g. its input/output mapping. Thus, while Kurzweil’s discounting of randomness seems an improvement over Gell-Mann’s definition of complexity, his discounting of ‘arbitrary’ data seems of little use without a definition of ‘arbitrary’: data, or information, is what complexity is all about.
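One crude way to see why an information-based measure cannot, by itself, separate ‘arbitrary’ data from other information is to use compressed size under zlib as a rough stand-in for information content. That proxy is an assumption of this sketch, not the measure Kurzweil or Gell-Mann uses, and the phone book is synthetic: hypothetical names (`person00000`, ...) paired with random ten-digit numbers.

```python
import os
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size / original size: a crude proxy for information content."""
    return len(zlib.compress(data, 9)) / len(data)


def fake_phonebook(entries: int, seed: int = 0) -> bytes:
    """Synthetic phone book: hypothetical names paired with random 10-digit numbers."""
    rng = random.Random(seed)
    lines = [f"person{i:05d},{rng.randrange(10**9, 10**10)}\n" for i in range(entries)]
    return "".join(lines).encode("ascii")


samples = {
    "random bytes": os.urandom(46_000),    # no structure at all
    "repeated pattern": b"ABCD" * 11_500,  # pure regularity
    "phone book": fake_phonebook(2_000),   # arbitrary but useful links (46,000 bytes)
}

for name, data in samples.items():
    print(f"{name:16s} {compression_ratio(data):.3f}")
```

Random bytes barely compress and the repeated pattern compresses almost completely; the synthetic phone book lands in between. Nothing in the compressor distinguishes digits that are meaningless from the arbitrary but useful links that make a phone book valuable, which is why ‘arbitrary’ needs a definition before it can be discounted.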

Kurzweil seems to have missed the biggest reason the genome can contain much less information and complexity than a human: the rest of the information is encoded in experience. But while this is very efficient in terms of genome size (compactness that was selected for in bacteria, where the time needed to replicate the genome limits how fast a lineage can propagate), it is very inefficient at transferring information across generations, since what an organism learns from experience is not passed on through its genome. Technology will be vastly superior to humans in its speed and ability to learn from other systems’ experience.

I write this on a train in Austria, as I speed away from the streets of Salzburg, where centuries-old inscriptions on centuries-old buildings remain unbothered by the passage of time, even in what is arguably the most uniformly technologically up-to-date continent on Earth. So it seems hard to believe Kurzweil’s claim that life as we know it will cease to exist in the next fifty years, except in the way that it has already been transformed over the centuries: through incremental improvements in technology. It’s exciting enough to ponder the possibility, though. I hope I get to hang around long enough to find out.

Kurzweil’s chapter entitled ‘A Theory of Technology Evolution’ strikes me as fascinating, but it is more an empirical study than a theory. The big question it raises for me is: why do so many disparate trends follow an exponential growth curve so closely over many years (the goodness of fit of many of these curves is striking)? Why does technological growth in any one measure (such as the computational power of computers as a function of time) obey an exponential curve? The answer is the one suggested above: humans work hard enough to improve things by a perceptible amount, and perception is logarithmic, registering variations not as absolute amounts but as fractions of the previous value.
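This explanation can be sketched as a toy model under hypothetical assumptions: each year’s improvement is just large enough to be noticed, i.e. a fixed fraction `weber_fraction` of the current value (10% below, an arbitrary choice). Fitting a straight line to the logarithm of the values, the usual way of testing for exponential growth, then recovers that fraction as the annual growth rate. `weber_trend` and `fit_exponential` are hypothetical helpers written for this illustration.

```python
import math


def weber_trend(start: float, weber_fraction: float, years: int) -> list[float]:
    """Hypothetical model: each year's gain is a fixed, just-noticeable
    fraction of the current value."""
    values = [start]
    for _ in range(years - 1):
        values.append(values[-1] * (1 + weber_fraction))
    return values


def fit_exponential(values: list[float]) -> tuple[float, float]:
    """Least-squares line through (year, log value); needs >= 2 positive values.
    Returns (annual growth factor, R^2 of the log-linear fit)."""
    xs = list(range(len(values)))
    ys = [math.log(v) for v in values]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return math.exp(slope), 1 - ss_res / ss_tot


growth_factor, r_squared = fit_exponential(weber_trend(1.0, 0.10, 30))
print(f"annual growth factor {growth_factor:.3f}, R^2 = {r_squared:.6f}")
```

Real trends are noisy, so their fits are never this clean; the point is only that steady, perceptible fractional improvements trace a straight line on a log scale.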